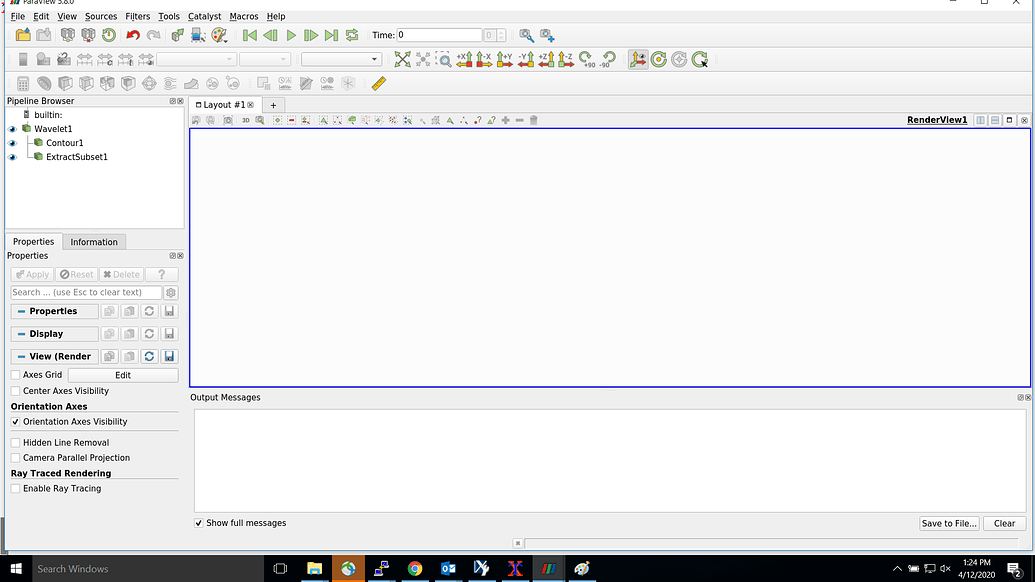

Paraview with MPI Support - CFD Online Discussion Forums

Hi, I guess this is a better place to ask about ParaView and OpenFoam-Ext; perhaps I'll have better luck here. I have successfully built OpenFoam Ext 3.2, including the third-party applications.

I tried running ParaView in parallel using the command `mpirun -env I_MPI_DEBUG 5 -ppn 8 -n 16 -hosts node0,node1 -env I_MPI_FABRICS=shm:dapl -env I_MPI_DAPL_PROVIDER=ofa-v2-ib0 -env I_MPI_DYNAMIC_CONNECTION=0 pvserver -display :0.0`

However, the output from the Torque job I use to submit the above shows (slightly cut down) that one instance succeeds on each node and all other instances fail.

So after a bit of research I found that I need to explicitly enable MPI support. I found two spec files that relate to ParaView, and I hope I changed the correct ones. In both files I set `_withMPI` to true and rebuilt ParaView (after running ...). I still have the same problem: multiple instances of ParaView are started, but only one succeeds (one on each node binds to the port). `pvserver -V` returns ...

Have I changed the right files? (To be honest, I barely know what I'm doing in this space; it's not my area of expertise, and the client seems unable to build it themselves.)

PARAVIEW MPI CODE

ParaView is funded by the US Department of Energy ASCI Views program as part of a 3-year contract awarded to Kitware, Inc., by a consortium of three National Labs: Los Alamos, Sandia, and Livermore. The goal of the project is to develop scalable parallel processing tools with an emphasis on distributed memory implementations.

Unfortunately, there is no example for the vtkXMLPStructuredGridWriter class (VTK Classes not used in the Examples). So I decided to write the simplest code to generate *.vts and *.pvts files for a structured grid, very similar to the case you are looking for. The following code uses MPI and VTK to write parallel structured grid files. In this example, we have two processes which create their own *.vts files, and the vtkXMLPStructuredGridWriter class writes the *.pvts file.
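The MPI/VTK code that the answer refers to is not reproduced here. As a rough stand-in, the sketch below uses only the Python standard library to build the text of a `.pvts` index for the two-process case, which is the kind of file vtkXMLPStructuredGridWriter produces. The function name `build_pvts`, the file names `piece_0.vts` and `piece_1.vts`, the split along x, and the `Float32` point type are all assumptions made for illustration; they have to match whatever the real `.vts` pieces contain.

```python
import xml.etree.ElementTree as ET


def build_pvts(whole_extent, pieces, ghost_level=0, point_type="Float32"):
    """Return the text of a .pvts index file (hypothetical helper).

    whole_extent: (x0, x1, y0, y1, z0, z1) in point indices.
    pieces: list of (extent, source_file) pairs, one per writer process.
    point_type: must match the point array type stored in the .vts pieces.
    """
    root = ET.Element("VTKFile", type="PStructuredGrid", version="0.1",
                      byte_order="LittleEndian")
    grid = ET.SubElement(root, "PStructuredGrid",
                         WholeExtent=" ".join(map(str, whole_extent)),
                         GhostLevel=str(ghost_level))
    # Describe the point coordinates array that every piece carries.
    ppoints = ET.SubElement(grid, "PPoints")
    ET.SubElement(ppoints, "PDataArray", type=point_type,
                  NumberOfComponents="3")
    # One <Piece> entry per .vts file, with its extent inside the whole grid.
    for extent, source in pieces:
        ET.SubElement(grid, "Piece",
                      Extent=" ".join(map(str, extent)), Source=source)
    if hasattr(ET, "indent"):  # pretty-printing is available from Python 3.9
        ET.indent(root)
    return '<?xml version="1.0"?>\n' + ET.tostring(root, encoding="unicode") + "\n"


# Two processes, each owning half of an 8-cell-wide grid along x.
# The file names are placeholders, not names taken from the original post.
pieces = [
    ((0, 4, 0, 8, 0, 8), "piece_0.vts"),
    ((4, 8, 0, 8, 0, 8), "piece_1.vts"),
]
pvts_text = build_pvts((0, 8, 0, 8, 0, 8), pieces)
print(pvts_text)
```

Opening the resulting `.pvts` in ParaView only works if each `Extent` matches the extent written inside the corresponding `.vts` file, so the values above should be read as placeholders.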

PARAVIEW MPI HOW TO

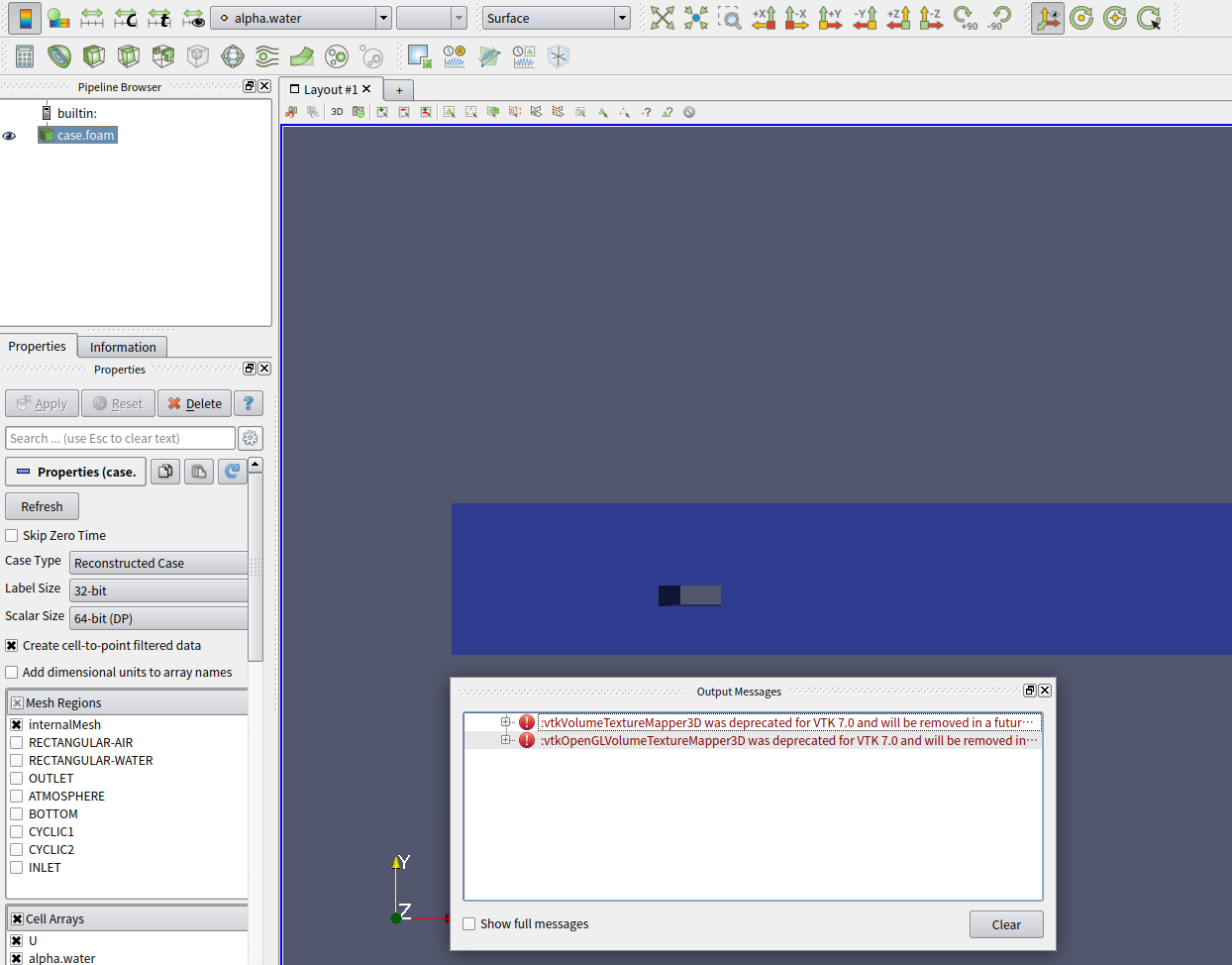

For a Lattice Boltzmann simulation of a lid-driven cavity (CFD), I'm decomposing my cubic domain into 8 (also cubic) subdomains, which are computed independently by 8 ranks. Each MPI rank produces a VTK file for each timestep, and since I'm using ParaView I want to visualize the whole thing as one cube.

To be more specific about what I am trying to achieve: I have a cube with length 8 (number of elements in each direction), so 8x8x8 = 512 elements. Each dimension is distributed across 2 ranks. Every rank writes its result to a file called lbm_out_.vts in the VTK StructuredGrid format. I want a *.pvts file that collects the *.vts files and combines the files containing the subdomains into a single file that ParaView can treat as the whole domain.

Unfortunately, I'm facing many issues with that, since I feel ParaView and VTK are extremely poorly documented and the error messages from ParaView are totally useless. The *.pvts file I currently have includes a ghost layer. With that file, which I feel should work correctly (note that there are no parameters yet, just plain geometry information), my domain ranges are totally messed up, although each *.vts file works fine on its own. I have attached a screenshot to make things clearer.

What may be the problem? Is it possible to use legacy VTK files for this task? Might I be doing something totally wrong? I really don't know how to accomplish this task, and the resources I find on Google are very limited.
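One thing that may be worth checking in a setup like this is whether the extent of each piece lines up with its neighbours. The sketch below computes the extents for the 8x8x8 cube split 2x2x2, assuming that extents are given in point indices (so 8 cells span points 0 to 8), that neighbouring pieces share their boundary plane of points, and that no ghost layer is used. The rank ordering (x varying fastest) and the helper names `piece_extents` and `cell_count` are assumptions for illustration, not something stated in the question.

```python
from itertools import product


def piece_extents(cells_per_axis, ranks_per_axis):
    """Yield (rank, extent) for a cubic block decomposition.

    Extents are (x0, x1, y0, y1, z0, z1) in point indices, so adjacent
    pieces share the plane of points on their common face.
    """
    if cells_per_axis % ranks_per_axis:
        raise ValueError("cells must divide evenly among ranks")
    n = cells_per_axis // ranks_per_axis  # cells per rank along each axis
    # Assumed rank ordering: x index varies fastest, then y, then z.
    blocks = product(range(ranks_per_axis), repeat=3)
    for rank, (k, j, i) in enumerate(blocks):
        yield rank, (i * n, (i + 1) * n, j * n, (j + 1) * n, k * n, (k + 1) * n)


def cell_count(extent):
    """Number of cells enclosed by an extent given in point indices."""
    x0, x1, y0, y1, z0, z1 = extent
    return (x1 - x0) * (y1 - y0) * (z1 - z0)


extents = dict(piece_extents(8, 2))
for rank, extent in extents.items():
    print(rank, " ".join(map(str, extent)))

# Sanity check: the 8 pieces together cover all 512 cells exactly once.
total_cells = sum(cell_count(e) for e in extents.values())
print("total cells:", total_cells)
```

Under these assumptions the whole extent would be `0 8 0 8 0 8`, and each piece would have to carry the same extent in its own `.vts` file as in the `.pvts` index. A ghost layer changes the per-piece extents and is deliberately left out of this sketch.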
